UMBC researchers develop better techniques to render video game characters with realistic skin

Researchers at the University of Maryland, Baltimore County (UMBC) have developed a new solution to render an essential detail in many video games: human skin. The research is published in the Proceedings of the Association for Computing Machinery on Computer Graphics and Interactive Techniques [1]. Marc Olano, associate professor of computer science and electrical engineering at UMBC, led this research alongside Tiantian Xie, Ph.D. ’22, computer science. Xie, under the guidance of Olano, has worked with researchers Brian Karis and Krzysztof Narkowicz at the gaming company Epic Games, developing a keen understanding of gamers’ user experience, including the precise level of realism and detail that players are looking for in human characters.

Game developers seek to create visuals that are as realistic as possible without stepping into the “uncanny valley.” The term describes graphics that come close to mimicking a real human, but not quite close enough, in a way users find disturbing. The resulting unease can distract from their enjoyment of the game.

In many games, human skin is rendered in such a way that it looks like a plastic object. This plastic look can occur because animators aren’t accounting for subsurface scattering – the way light penetrates a translucent surface such as skin, scatters beneath it, and exits at a different point. Subsurface scattering is animators’ main priority when it comes to transforming skin from looking like plastic to looking truly real.

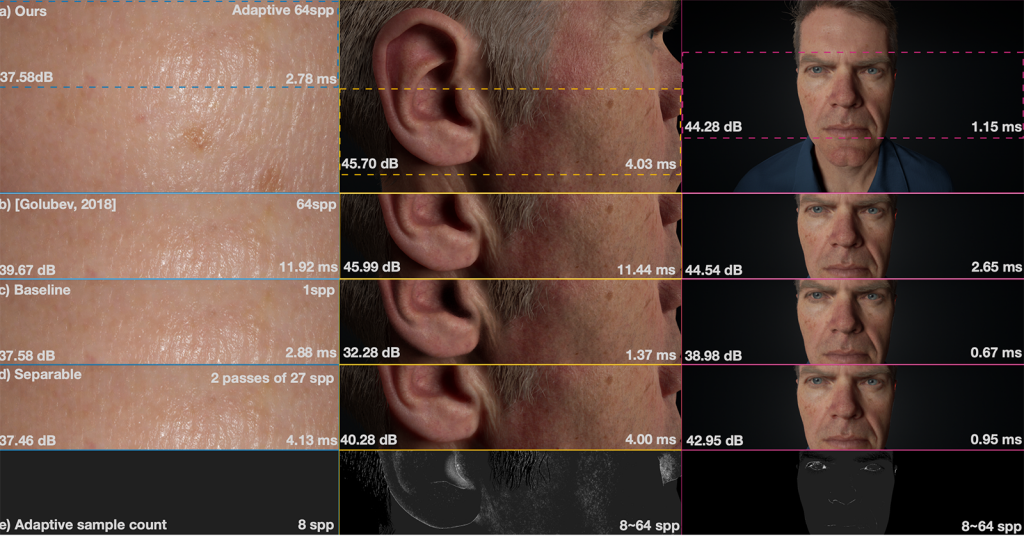

Olano’s method builds upon research developed by large gaming companies to create realistic depictions of human skin that will also load quickly within a gaming interface. “Our method adds an ability to adaptively estimate how many samples you actually need to get the look that you want without having to do a lot of additional computation to get a smooth image,” explains Olano.

The method minimizes the amount of computation needed to create photo-realistic images. Previous techniques were either not realistic enough, or ran too slowly for use in games, negatively affecting the gaming experience. The new method is based on techniques developed for offline film production rendering. Xie, the first author of the paper, states, “Offline rendering techniques are not suitable for real-time rendering because adding the technique itself in real-time introduces a large overhead. Our technique eliminates this overhead.”

Olano and his team created an algorithm to determine which pixels need to be rendered differently from the others because of changes in the light gradient. Their sampling method uses temporal variance, a measure of how much a pixel’s value fluctuates from frame to frame, to lower the overall number of changes within each frame while still maintaining a realistic depiction of subsurface scattering. Since fewer changes are needed per frame, the method offers an efficient way of rendering realistic skin within the capabilities of today’s computing power.
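To make the idea of variance-guided sampling more concrete, here is a minimal Python sketch. It is not the algorithm from the paper [1]. It is an illustrative heuristic built on assumptions of our own: each pixel keeps an exponentially weighted running mean and variance of its value across frames, and that temporal variance is treated as a rough proxy for how many samples the pixel needs. The names `PixelStats`, `sample_budget`, and `budgets_from_history`, as well as the smoothing factor, target variance, and sample limits, are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class PixelStats:
    """Running mean and variance of one pixel's value across frames (hypothetical helper)."""
    mean: float = 0.0
    variance: float = 0.0
    seen: bool = False

    def update(self, value: float, alpha: float = 0.2) -> None:
        # The first frame only initializes the mean; there is no variance yet.
        if not self.seen:
            self.mean = value
            self.seen = True
            return
        # Standard exponentially weighted update of mean and variance.
        delta = value - self.mean
        self.mean += alpha * delta
        self.variance = (1.0 - alpha) * (self.variance + alpha * delta * delta)


def sample_budget(variance: float,
                  target_variance: float = 1e-3,
                  min_samples: int = 8,
                  max_samples: int = 64) -> int:
    """Pick a sample count so that variance / n is at most target_variance, then clamp it.

    Assumption: the temporal variance stands in for the per-sample variance.
    """
    needed = math.ceil(variance / target_variance)
    return max(min_samples, min(max_samples, needed))


def budgets_from_history(frames: list[list[float]]) -> list[int]:
    """Feed a short history of frames (lists of pixel values) and return per-pixel budgets."""
    stats = [PixelStats() for _ in frames[0]]
    for frame in frames:
        for pixel_stats, value in zip(stats, frame):
            pixel_stats.update(value)
    return [sample_budget(s.variance) for s in stats]


if __name__ == "__main__":
    # Pixel 0 is stable over time; pixel 1 flickers between dark and bright.
    history = [[0.5, 0.2], [0.5, 0.8], [0.5, 0.2], [0.5, 0.8]]
    print(budgets_from_history(history))
```

In this toy setup, the stable pixel falls back to the minimum budget while the flickering pixel is pushed to the maximum, which mirrors the article's point that extra computation is spent only where the image is actually changing.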

The algorithm used by Olano’s team is built upon a foundation of research that is known and accessible to game developers. This offers a promising path for the gaming industry to pursue realism while maintaining an awareness of the computational ability of an average gaming system. Developers may be able to begin using this technique soon to create more realistic human figures in games, growing the gaming market even more.

[1] Tiantian Xie, Marc Olano, Brian Karis, and Krzysztof Narkowicz. 2020. Real-time subsurface scattering with single pass variance-guided adaptive importance sampling. Proc. ACM Comput. Graph. Interact. Tech. 3, 1, Article 3 (Apr 2020), 21 pages. DOI:https://doi.org/10.1145/3384536

Adapted from a press release written by Morgan Zepp that appeared in EurekAlert!.