CSEE faculty receive NSF award to help robots learn tasks by interacting naturally with people

UMBC Assistant Professors Cynthia Matuszek (PI) and Francis Ferraro (Co-PI), along with John Winder (Co-PI), a senior staff scientist at JHU-APL, received a three-year NSF award as part of the National Robotics Initiative on Ubiquitous Collaborative Robots. The award, for Semi-Supervised Deep Learning for Domain Adaptation in Robotic Language Acquisition, will advance the ability of robots to learn from interactions with people using spoken language and gestures in a variety of situations.

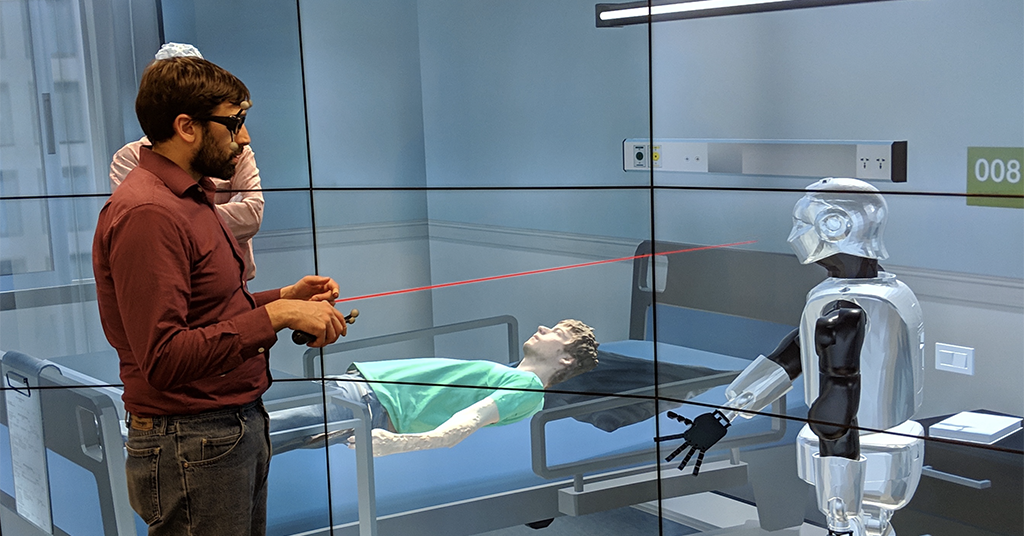

This project will enable robots to learn to perform tasks with human teammates from language and other modalities, and then transfer what they have learned to other robots with different capabilities in order to perform different tasks. This will ultimately allow human-robot teaming in domains where people use varied language and instructions to complete complex tasks. As robots become more capable and ubiquitous, they are increasingly moving into complex, human-centric environments such as workplaces and homes.

Deploying useful robots in settings where human specialists are stretched thin, such as assistive technology, elder care, and education, could have far-reaching impacts on human quality of life. Achieving this will require robots that learn about an end user’s goals and environment from natural interaction.

This work is intended to make robots more accessible and usable for non-specialists. To verify success and involve the broader community, tasks will be drawn from and tested in community Makerspaces, which are strongly linked with both education and community involvement. The project will address how collaborative learning and successful performance during human-robot interactions can be accomplished by learning from and acting on grounded language. To accomplish this, it will center on learning structured representations of abstract knowledge with goal-directed task completion, grounded in a physical context.

There are three high-level research thrusts: leveraging grounded language learning from many sources, capturing and representing the expectations implied by language, and using deep hierarchical reinforcement learning to transfer learned knowledge to related tasks and skills. The first will develop new perceptual models that learn an alignment among a robot’s multiple, heterogeneous sensor and data streams. The second will develop synchronous grounded language models that better capture both the general linguistic and the implicit contextual expectations needed for completing shared tasks. The third will develop a deep reinforcement learning framework that builds on the advances of the first two thrusts, enabling techniques for learning conceptual knowledge. Taken together, these advances will allow an agent to achieve domain adaptation, improve its behaviors in new environments, and transfer conceptual knowledge among robotic agents.

The research award will support both faculty and students working in the Interactive Robotics and Language lab on this task. It includes an education and outreach plan designed to increase participation by and retention of women and underrepresented minorities (URM) in robotics and computing, engaging with UMBC’s large URM population and world-class programs in this area.