New NSF project will help robots learn to understand humans in complex environments

UMBC Assistant Professor Cynthia Matuszek is the PI on a new NSF research award, EAGER: Learning Language in Simulation for Real Robot Interaction, with co-PIs Don Engel and Frank Ferraro. Research funded by this award will focus on improving human-robot interaction by using machine learning to enable robots to learn the meaning of human commands and questions, informed by their physical context.

While robots are rapidly becoming more capable and ubiquitous, their utility is still severely limited by the inability of regular users to customize their behaviors. This EArly-concept Grant for Exploratory Research (EAGER) will explore how examples of language, gaze, and other communications can be collected from a virtual interaction with a robot in order to learn how robots can interact better with end users. Current robots’ difficulty of use and inflexibility are major factors preventing them from being more broadly available to populations that might benefit, such as aging-in-place seniors. One promising solution is to let users control and teach robots with natural language, an intuitive and comfortable mechanism. This has led to active research in the area of grounded language acquisition: learning language that refers to and is informed by the physical world. Given the complexity of robotic systems, there is growing interest in approaches that take advantage of the latest in virtual reality technology, which can lower the barrier to entry for this research.

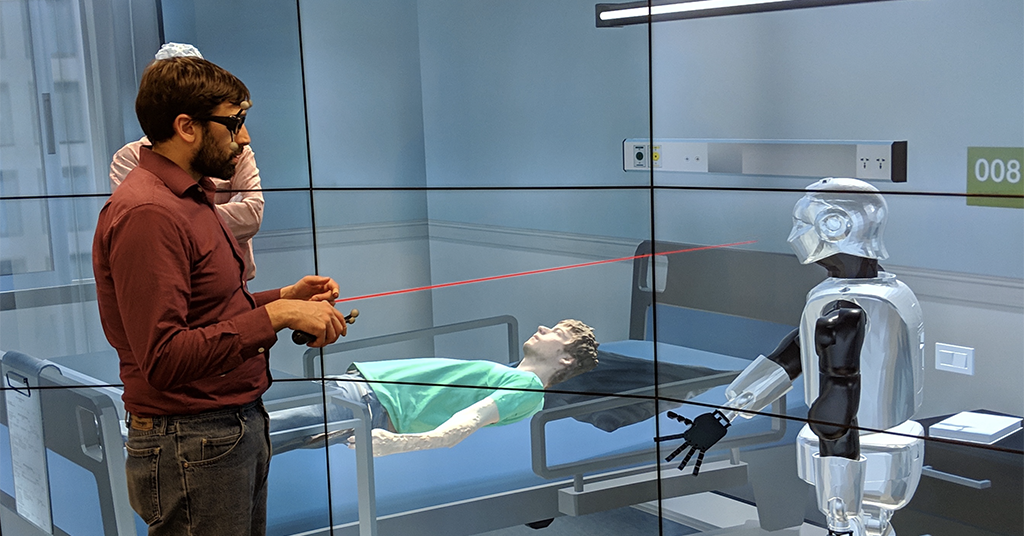

This EAGER project develops infrastructure that will lay the necessary groundwork for applying simulation-to-reality approaches to natural language interactions with robots. The project aims to bootstrap robots’ learning to understand language by combining data collected in a high-fidelity virtual reality environment with simulated robots and real-world testing on physical robots. A person will interact with simulated robots in virtual reality, and their actions and language will be recorded. By integrating with existing robotics technology, this project will model the connection between the language people use and the robot’s perceptions and actions. Natural language descriptions of what is happening in simulation will be obtained and used to train a joint model of language and simulated percepts as a way to learn grounded language. The effectiveness of the framework and algorithms will be measured on automatic prediction/generation tasks and on the transferability of learned models to a real, physical robot. This work will serve as a proof of concept for the value of combining robotics simulation with human interaction, and will provide interested researchers with resources to bootstrap their own work.

Dr. Matuszek’s Interactive Robotics and Language lab is developing robots that everyday people can talk to, whether to tell them to perform tasks or to tell them about the world around them. The lab’s approach, learning to understand language in the physical space that people and robots occupy, is called grounded language acquisition. It is key to building robots that can perform tasks in noisy, real-world environments instead of being preprogrammed to handle a fixed set of predetermined tasks.