Computer Science and Electrical Engineering

University of Maryland, Baltimore County

Ph.D. Dissertation Defense

Multiple Objective Task Scheduling

for Scalable High Performance Computing

Tyler A. Simon

12:30-2:30 Friday, 8 November 2013, ITE 325b

Individual processor frequencies have reached an upper physical and practical limit. Processor designs now involve adding multiple processing elements to a single chip to enable increased performance. It is expected that all future processor designs will have multiple cores, with a focus on reducing frequencies and adding more individual processing elements (cores) while balancing power consumption and reliability and maintaining high performance.

Due to the increased complexity as well as increased heterogeneity of parallel architectures, petascale and future exascale systems, with the number of processors on the order of 10^8-10^9, must incorporate more intelligent software tools that help manage parallel execution for the user. I demonstrate that by managing the parallel execution environment at runtime, we can satisfy performance tradeoffs for a particular application or application domain for a set of common HPC architectures. It is expected that future exascale computing systems will have to execute programs on many individual and potentially low-powered processing elements. These processors need to be fed data efficiently and reliably throughout the duration of a parallel computation.

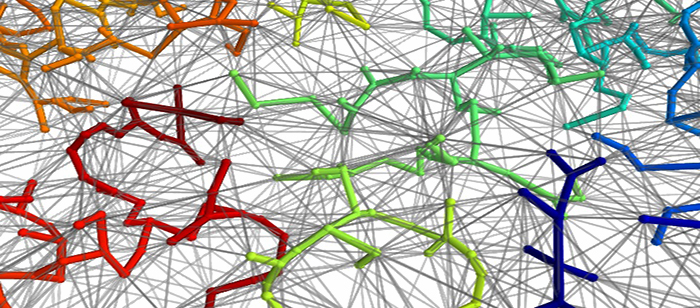

In this thesis I provide a performance analysis of two common graph algorithms for finding a minimum spanning tree and evaluate the performance of a common high performance computing (HPC) benchmark on multicore processors. I also develop a novel autonomic execution model and adaptive runtime system, the Adaptive Runtime Resource for Intensive Applications (ARRIA). ARRIA is designed with the intent of improving application programmability, scalability and performance while freeing the programmer from explicit message passing and thread management. Experiments are conducted that evaluate ARRIA’s capabilities on data-intensive applications, those where the majority of execution time is spent reading from and writing to local or remote memory locations. In my approach, I focus on developing task schedules that satisfy multiple objectives for clusters of compute nodes during runtime. This approach is novel in that it can control application performance and satisfy constraints using multi-objective optimization techniques as the program runs. The development and implementation of the ARRIA runtime system and subsequent optimization criteria likely provide reasonable models for the exascale computing era.

The results of this dissertation demonstrate, experimentally, that for high performance computing systems, a dynamic, task-based, parallel programming environment and scheduler can provide lower total workload runtimes and high utilization compared with commonly used static scheduling policies.