Professors Nilanjan Banerjee and Ryan Robucci have received more than $1.5M to date from the US Army Research Office to support their work with the US Army Research Laboratory on intelligent human-AI collaborative teams for Department of Defense (DoD) applications. Such teams are expected to draw on the strengths of both people and AI systems and to achieve better results than human-only or machine-only teams. Their work explores one key to the success of these collaborative systems: the AI system’s ability to understand and adapt to its human teammates.

For many tasks, people’s emotional and cognitive states are important properties to which automated systems must adapt. One challenge in exploring this paradigm is developing both the methods and the real-time, human-in-the-loop hardware and software platforms needed for experimentation. Current measures of human state in automation rely primarily on self-report questionnaires, which are not real-time and may be unreliable, giving an AI a poor basis for modulating its responses to people. The UMBC researchers have been working with the US Army Research Laboratory to advance this area by testing the hypothesis that physiological measures acquired with wearables, such as heart rate variability, can be used to infer human states such as stress, and that these inferred states can in turn be used to modulate the information that computing systems present to people.
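Heart rate variability (HRV) can be summarized in several ways. One common time-domain metric is RMSSD, the root mean square of successive differences between consecutive heartbeat (RR) intervals; lower values are often associated with higher stress. The article does not say which HRV features the UMBC models use, so the sketch below is only an illustration of the kind of quantity a wearable pipeline might compute, assuming RR intervals in milliseconds are already available.

```python
import math


def rmssd(rr_intervals_ms):
    """Return RMSSD (ms): root mean square of successive RR-interval differences.

    Assumes rr_intervals_ms is a sequence of beat-to-beat intervals in
    milliseconds, e.g. derived from an EKG or wrist-worn sensor.
    """
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each interval and the one before it.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    # Mean of squared differences, then square root.
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))
```

For example, `rmssd([800, 810, 790, 805])` uses the differences 10, -20, and 15 ms, giving the square root of 725/3, about 15.5 ms. A real system would typically compute this over a sliding window and clean artifacts (missed or extra beats) first.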

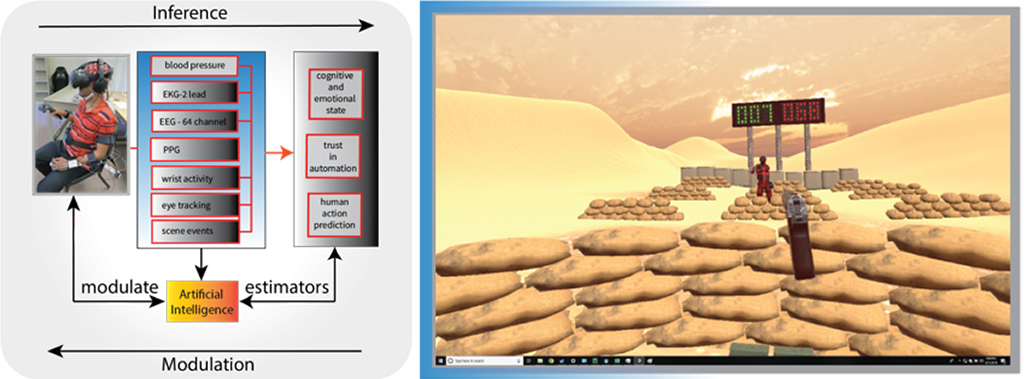

Their approach uses real-time models and systems built at UMBC that infer human state using physiological measures collected from wearables with behaviorally relevant design features. The AI that implements these models will modulate the data presented to the operator as a function of stress.
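The article does not describe how the AI actually modulates the data it presents, so the following is a purely hypothetical sketch of one simple policy: as an estimated stress level rises, show the operator fewer items and keep only the highest-priority ones. The `Alert` type, its `priority` field, the assumption that stress arrives as a model output in [0, 1], and the display limits are all illustrative inventions, not part of the UMBC system.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    priority: int  # hypothetical: higher means more urgent
    message: str


def modulate_alerts(alerts, stress, max_items_calm=5, max_items_stressed=2):
    """Hypothetical policy: show fewer, higher-priority alerts as stress rises.

    stress is assumed to be a model-estimated value in [0, 1].
    """
    if not 0.0 <= stress <= 1.0:
        raise ValueError("stress must be in [0, 1]")
    # Linearly shrink the display budget from the calm limit to the stressed limit.
    budget = round(max_items_calm - stress * (max_items_calm - max_items_stressed))
    # Keep the most urgent alerts first.
    ranked = sorted(alerts, key=lambda a: a.priority, reverse=True)
    return ranked[:budget]
```

With six alerts of priorities 0 through 5, a calm operator (`stress=0.0`) would see five of them, while a highly stressed one (`stress=1.0`) would see only the two most urgent. A linear budget is just the simplest choice; a deployed system could instead condition on task context, trust, or learned operator preferences.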

They are exploring and evaluating the approach in a simple virtual-reality task simulation in which real-time models infer human-AI trust and human state from psychophysiological measures collected by wearable sensors (EEG, EKG, and activity). The system modulates the information and recommendations it presents to individuals or human teams based on human-AI trust, human state, and application-specific scene information.